FastVLM: Apple's Extremely Fast Vision Language Model

Runs directly on iPhone, with time to first token up to 85x faster than LLaVA-OneVision-0.5B!

How Does FastVLM Work?

Image Understanding

Token Conversion

Language Generation

FastVLM efficiently understands image content, converts it into compact tokens, and then uses these tokens to quickly generate accurate text descriptions or answers for real-time AI applications.

Why Choose FastVLM? Core Advantages

Extreme Speed Performance

Very fast time to first token (TTFT). FastVLM-0.5B produces its first token up to 85x faster than LLaVA-OneVision-0.5B, and FastVLM-7B (with Qwen2) is 7.9x faster than Cambrian-1-8B at similar accuracy.
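The speed figures above refer to time to first token: the delay between submitting a request and receiving the first generated token, which for a vision language model typically covers image encoding plus the language model's prompt processing. The sketch below shows one way to measure it over any token stream and to turn two latencies into an "Nx faster" figure. The names `time_to_first_token`, `speedup`, and `fake_vlm_stream` are hypothetical helpers for illustration and are not part of FastVLM; the stand-in stream only sleeps to imitate work.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def time_to_first_token(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[str, float]:
    """Return the first token of `stream` and the wait for it in milliseconds."""
    start = clock()
    first = next(iter(stream))  # blocks until the first token is produced
    return first, (clock() - start) * 1000.0


def speedup(baseline_ms: float, candidate_ms: float) -> float:
    """How many times faster the candidate is than the baseline."""
    if candidate_ms <= 0:
        raise ValueError("candidate_ms must be positive")
    return baseline_ms / candidate_ms


def fake_vlm_stream(encode_s: float, prefill_s: float) -> Iterator[str]:
    """Stand-in for a real model (assumption): sleeps, then yields tokens."""
    time.sleep(encode_s)   # imitates image encoding
    time.sleep(prefill_s)  # imitates prompt processing in the language model
    yield "A"
    yield "cat"


if __name__ == "__main__":
    token, ms = time_to_first_token(fake_vlm_stream(0.02, 0.03))
    print(f"first token {token!r} after {ms:.1f} ms")
    # Placeholder latencies, not measurements of any real model.
    print(f"speedup: {speedup(200.0, 50.0):.1f}x")
```

Because the stream is a lazy generator, none of the simulated work runs until the first token is requested, so the timer captures the whole wait. With a real model, the stream would be whatever token iterator the inference runtime exposes.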

Compact & Efficient Design

A smaller model is easier to deploy. FastVLM-0.5B uses a vision encoder 3.4x smaller than that of LLaVA-OneVision-0.5B, which makes it well suited to on-device use on iPhone, iPad, and Mac.

On-Device AI Intelligence

FastVLM runs directly on your Apple device with no cloud dependency, which protects privacy and shortens response times.

It is designed for the iOS and macOS ecosystem and suits edge AI applications.

FastVLM Examples Showcase - Real AI Demonstrations

How many fingers am I holding up?

Respond using a single number. If no hand is present, respond with 0.

Object Counting AI

What is written in this image?

Output only the text in the image.

Handwriting Recognition AI

Describe the image in English.

Output should be brief, about 15 words or less.

Emoji Understanding AI
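Each example above pairs a question with an output constraint, which keeps replies short and easy to check in code. The sketch below assumes the two lines of each example are sent together as one prompt; the dictionary keys and the helpers `build_prompt`, `parse_count`, and `is_brief` are hypothetical names for illustration, not part of FastVLM.

```python
import re

# Prompt text copied from the examples above: (question, output constraint).
PROMPTS = {
    "object_counting": (
        "How many fingers am I holding up?",
        "Respond using a single number. If no hand is present, respond with 0.",
    ),
    "handwriting": (
        "What is written in this image?",
        "Output only the text in the image.",
    ),
    "emoji": (
        "Describe the image in English.",
        "Output should be brief, about 15 words or less.",
    ),
}


def build_prompt(task: str) -> str:
    """Join the question and its constraint into one prompt string."""
    question, constraint = PROMPTS[task]
    return f"{question} {constraint}"


def parse_count(reply: str) -> int:
    """Extract the first integer from a counting reply."""
    match = re.search(r"\d+", reply)
    if match is None:
        raise ValueError(f"no number found in reply: {reply!r}")
    return int(match.group())


def is_brief(reply: str, max_words: int = 15) -> bool:
    """Check a caption against the word limit requested in the prompt."""
    return len(reply.split()) <= max_words


if __name__ == "__main__":
    print(build_prompt("object_counting"))
    print(parse_count("3"))
    print(is_brief("A yellow smiling face wearing dark sunglasses."))
```

Validating replies this way matters because a model can ignore the constraint; `parse_count` raises rather than guessing when no number is present.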

FastVLM Performance Comparison - Speed Benchmarks

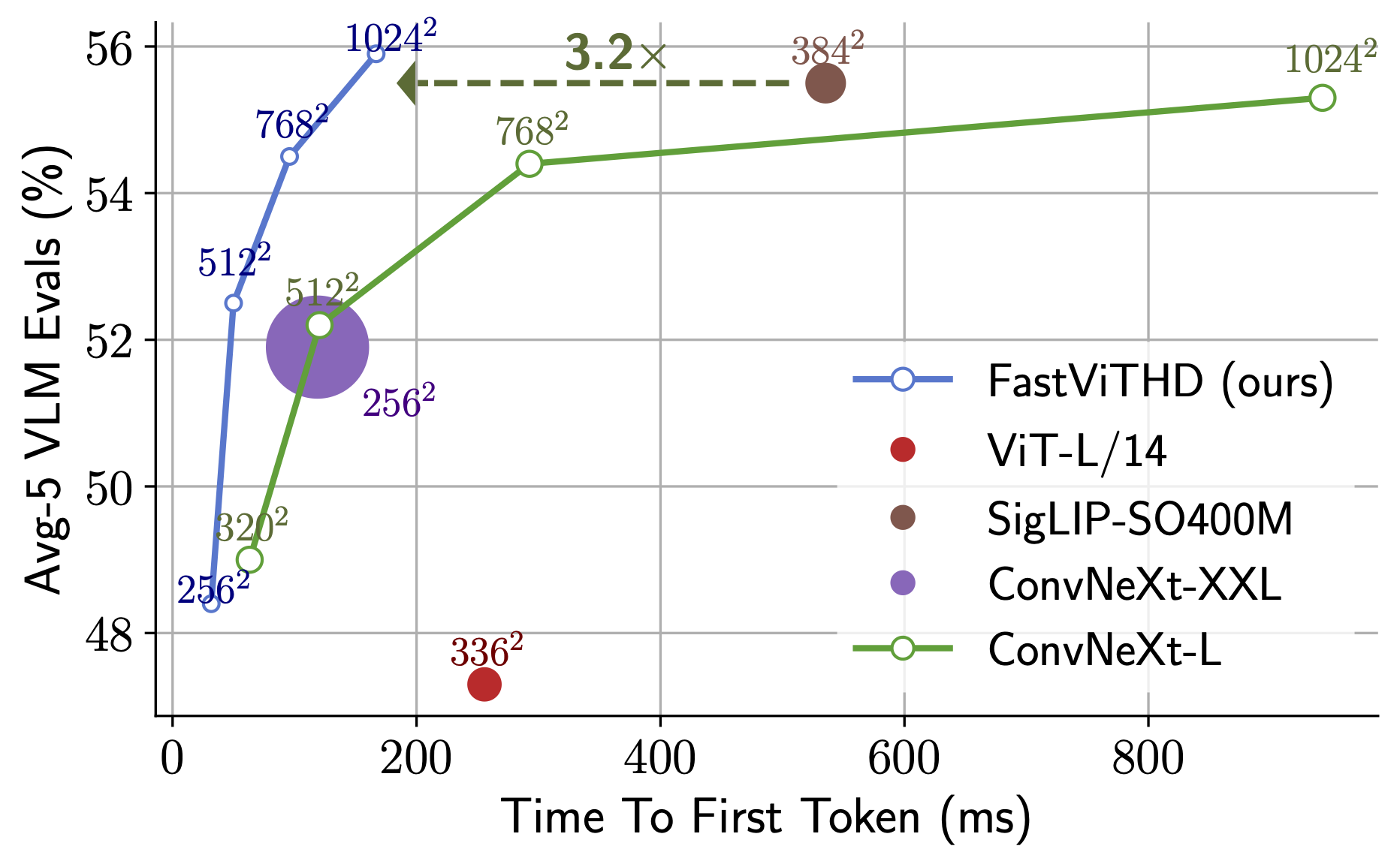

FastVLM reaches higher average evaluation scores at significantly lower time to first token than the other vision language models compared.

Time to First Token (ms) vs Average VLM Eval Score (%)

FastVLM Application Scenarios - AI Use Cases

AI Image Captioning

Automatically generate vivid and accurate text descriptions for images with advanced computer vision.

Visual Question Answering (VQA)

Understand image content and answer complex questions about the image using natural language processing.

AI Image Recognition & Analysis

Recognize objects, text, or data in images for intelligent analysis and machine learning applications.

FastVLM is especially well suited to scenarios that require real-time image and text interaction in mobile and edge computing environments.

Download FastVLM Models - PyTorch & Apple Silicon

PyTorch Model Checkpoints

| AI Model | Training Stage | Download Link |
|---|---|---|
| FastVLM-0.5B | 2 | fastvlm_0.5b_stage2 |
| FastVLM-0.5B | 3 | fastvlm_0.5b_stage3 |
| FastVLM-1.5B | 2 | fastvlm_1.5b_stage2 |
| FastVLM-1.5B | 3 | fastvlm_1.5b_stage3 |
| FastVLM-7B | 2 | fastvlm_7b_stage2 |
| FastVLM-7B | 3 | fastvlm_7b_stage3 |
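The checkpoint names in the table follow a regular pattern, `fastvlm_<size>_stage<n>`, so a script can derive them from a model size and training stage. The sketch below reproduces only the names shown in the table; the helper names are hypothetical, and the download URLs themselves are not given here, so none are constructed.

```python
# Sizes and stages exactly as listed in the checkpoint table.
SIZES = ("0.5b", "1.5b", "7b")
STAGES = (2, 3)


def checkpoint_name(size: str, stage: int) -> str:
    """Build a checkpoint name such as 'fastvlm_0.5b_stage3'."""
    key = size.lower()
    if key not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    if stage not in STAGES:
        raise ValueError(f"unknown stage {stage!r}; expected one of {STAGES}")
    return f"fastvlm_{key}_stage{stage}"


def all_checkpoints() -> list:
    """List every checkpoint name in table order."""
    return [checkpoint_name(size, stage) for size in SIZES for stage in STAGES]


if __name__ == "__main__":
    for name in all_checkpoints():
        print(name)
```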

Apple Silicon Compatible AI Models

To make it easier to run on Apple Silicon devices (iPhone, iPad, Mac), we also provide models in pre-converted formats optimized for on-device AI.

Learn More About FastVLM - Research & Documentation

Explore the technical details of FastVLM, view the source code, or read the research paper to understand the breakthrough technology.

About FastVLM AI Technology

FastVLM: Apple's ultra-fast vision language model that runs directly on iPhone, with first token output up to 85x faster! Perfect for on-device AI applications and edge computing.